Knowledgeable Neural Conversational Models

At the MALL lab at the Indian Institute of Science, I worked on KNCM, a project supported by Google Research that asks: how can we use background world knowledge to build more engaging and interactive conversational AI agents? Current conversational systems are primarily driven by user queries, which leads to monotonous and uninteresting conversations. A system that can gauge user interests and respond accordingly would be an ideal chat partner. We believe that real-world knowledge and context awareness, traits missing from existing conversational systems, hold the key to achieving this goal. We therefore propose the Knowledgeable Neural Conversation Model (KNCM), a novel system capable of harnessing the power of knowledge graphs for smarter conversation.

KNCM’s core component is a mechanism to build conversation context. The conversation context will consist of two components: the knowledge context and the discourse context. We maintain the knowledge context as a distribution over parameterized knowledge graph nodes and edges.

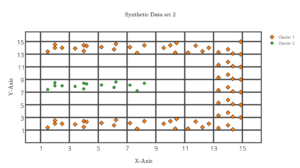

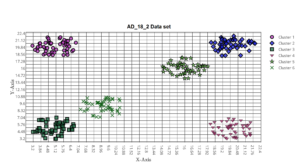

Hyper Cube Based Accelerated Density Based Spatial Clustering for Applications with Noise

Density-based clustering has proven to be very efficient and has found numerous applications across domains. The Density-Based Spatial Clustering of Applications with Noise (DBSCAN) algorithm can find clusters of varied shapes and is not sensitive to outliers in the data. In this paper we propose a new clustering algorithm, HyperCube-based Accelerated DBSCAN (HCA-DBSCAN), which runs in O(n log n), improving on the O(n^2) complexity of the original DBSCAN algorithm without compromising clustering accuracy. While DBSCAN can achieve the same complexity when spatial indexing is used, that complexity grows back to O(n^2) for higher-dimensional data, which does not happen with our approach. We combine distance-based aggregation, obtained by overlaying the data with customized grids, with representative points to reduce the number of comparisons. Experimental results show that the proposed algorithm achieves a significant run-time speedup of up to 52.57% compared with other improvements that try to reduce the complexity of DBSCAN to O(n log n). Another significant improvement is that we eliminate one of the two input parameters required by the original DBSCAN algorithm, making it more accessible to non-expert users. The paper can be found here and the code for a proof of concept can be found on GitHub.
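To give a rough feel for the grid overlay idea, here is a minimal Python sketch. It is not the HCA-DBSCAN implementation: the cell size (`eps / sqrt(d)`), the function names, and the rule for picking a representative point are all assumptions made for illustration. With that cell size, the diagonal of each hypercube equals `eps`, so points sharing a cell never need to be compared against each other.

```python
import math
from collections import defaultdict


def build_hypercube_grid(points, eps):
    """Bucket point indices into hypercubes whose diagonal equals eps.

    Assumption for this sketch: side = eps / sqrt(d), so any two points
    in the same cell are at most eps apart.
    """
    dims = len(points[0])
    side = eps / math.sqrt(dims)  # diagonal = side * sqrt(dims) = eps
    cells = defaultdict(list)
    for index, point in enumerate(points):
        key = tuple(math.floor(coord / side) for coord in point)
        cells[key].append(index)
    return dict(cells), side


def representative(points, member_indices):
    """Pick the member closest to the cell centroid (an illustrative choice)."""
    dims = len(points[0])
    count = len(member_indices)
    centroid = [sum(points[i][d] for i in member_indices) / count
                for d in range(dims)]
    return min(member_indices, key=lambda i: math.dist(points[i], centroid))


if __name__ == "__main__":
    sample = [(0.1, 0.1), (0.2, 0.2), (0.3, 0.3), (5.0, 5.0)]
    grid, cell_side = build_hypercube_grid(sample, eps=1.0)
    for cell_key, members in grid.items():
        print(cell_key, members, "representative:", representative(sample, members))
```

Only representatives of neighbouring cells would then need to be compared, which is where the reduction in pairwise distance computations would come from in a scheme like this.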

Intelligence Analysis of Tay Twitter Bot

This project was carried out at the Athena Research Center in Athens, Greece. The aim of this paper is to provide a framework to understand and analyse the intelligence of chat-bots. With the ever-increasing number of chat-bots available, we consider intelligence analysis to be a functional parameter for determining the usefulness of a bot. For our analysis, we consider Microsoft’s Twitter bot Tay, released for online interaction in March 2016. We perform various natural language processing tasks on the tweets posted by Tay and directed at Tay, and discuss the implications of the results. To this end, we perform classification, text categorization, entity extraction, latent Dirichlet allocation analysis, and frequency analysis, and we model the vocabulary used by the bot with a word2vec system. Using the results of our analysis, we define a metric called the bot intelligence score to evaluate and compare the intelligence of bots in general.

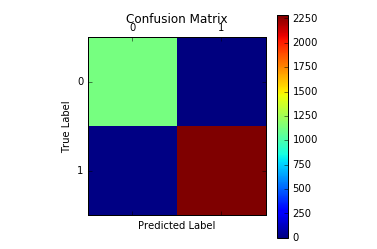

Deep Learning for Radio Telescopes

This project was undertaken at the Berkeley Institute for Data Science, University of California, Berkeley, as part of Astro Hack Week 2016.

Radio telescope clusters like HERA cannot detect in real time whether one of the antennas has ‘gone bad’. This can be determined only after a lot of data processing, which requires both time and resources. Furthermore, because the data is cross-correlated, all of the recorded data is deemed unusable. We use deep learning to predict in real time whether any of the antennas in the cluster is ‘bad’.

We were inspired by a Kaggle competition in which whale sounds were encoded as images and a convolutional network was used for classification. We take a similar approach: we encode the data as images and use a deep convolutional neural network for classification and prediction. We obtain an accuracy of 99.56%. The IPython notebook for the project can be found on GitHub.

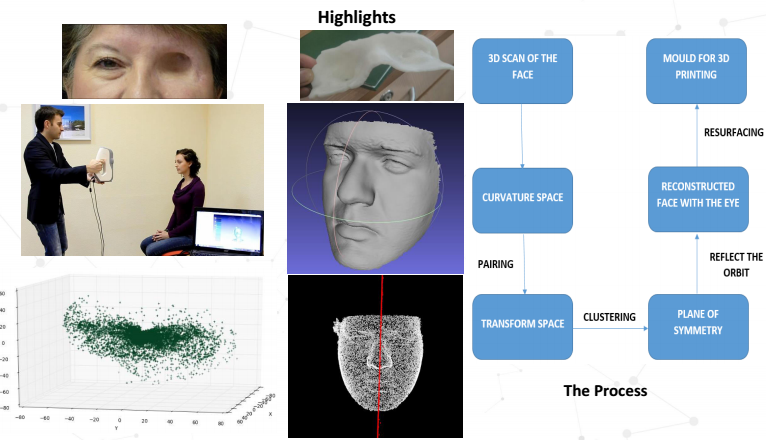

Prosthetic Eye Synthesis

This project was undertaken as a part of Massachusetts Institute of Technology’s healthcare bootcamp, ReDX: Rethinking Engineering Design and Execution.

Many patients have no option but to undergo orbital exenteration, which aims at local control of disease invading the orbit that is potentially fatal or relentlessly progressive. After the exenteration, patients tend to lose their social confidence, and a prosthetic eye aims to remedy this. Current techniques are cumbersome and time-consuming. Can modern technology be used to make this process more efficient?

Our 3D-scan-based technique will dramatically reduce the cost and time of the whole process. Most importantly, it will reduce the inconvenience caused to the patient, who has already suffered the trauma of losing an eye. For patients who get a scan done before the exenteration, we will be able to reconstruct their original faces.

Our software detects the plane of symmetry in the point cloud of the face obtained from the 3D scan. Using this plane of symmetry, we reflect the ocular orbit and thereby prepare the mould for the prosthetic eye, which can easily be 3D printed.
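The reflection step can be illustrated with a short Python sketch. It assumes the plane of symmetry has already been found and is given as a point on the plane plus a normal vector. The function name and the input format are hypothetical, and this is not the project's actual code. Each point p is mirrored using p' = p - 2((p - c) · n)n, where c is a point on the plane and n is the unit normal.

```python
import math


def reflect_across_plane(points, plane_point, normal):
    """Mirror 3D points across the plane through plane_point with the given normal.

    Hypothetical helper for illustration: detection of the symmetry plane
    itself is assumed to have happened elsewhere.
    """
    length = math.sqrt(sum(c * c for c in normal))
    if length == 0:
        raise ValueError("normal vector must be non-zero")
    unit = tuple(c / length for c in normal)

    reflected = []
    for p in points:
        # Signed distance from the point to the plane along the unit normal.
        dist = sum((pi - ci) * ni for pi, ci, ni in zip(p, plane_point, unit))
        # Move the point twice that distance back through the plane.
        reflected.append(tuple(pi - 2 * dist * ni for pi, ni in zip(p, unit)))
    return reflected


if __name__ == "__main__":
    # Toy "orbit" points on one side of the plane x = 0.
    healthy_side = [(1.0, 2.0, 3.0), (0.5, -1.0, 0.0)]
    print(reflect_across_plane(healthy_side, (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)))
```

Reflecting the result a second time returns the original points, which is a handy sanity check when working with real scan data.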

We are proud to announce that our proposed method is being used at the L. V. Prasad Eye Institute in Hyderabad, India.

Code for the project can be found on GitHub, and a poster summarizing the project can be found here.

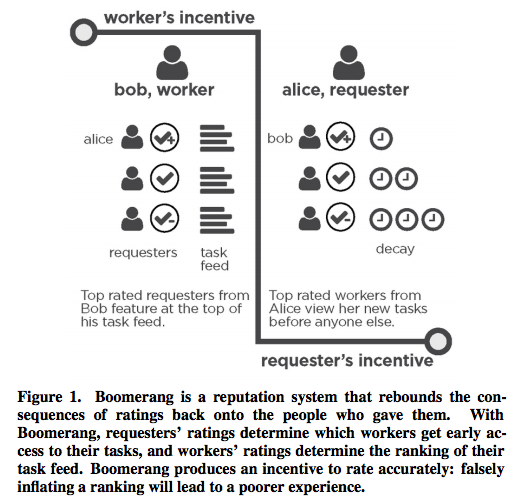

Stanford Crowd Research: Boomerang

Paid crowdsourcing platforms suffer from low-quality work and unfair rejections, but paradoxically, most workers and requesters have high reputation scores. These inflated scores, which make high-quality work and workers difficult to find, stem from social pressure to avoid giving negative feedback. We introduce Boomerang, a reputation system for crowdsourcing that elicits more accurate feedback by rebounding the consequences of feedback directly back onto the person who gave it. With Boomerang, requesters find that their highly rated workers gain earliest access to their future tasks, and workers find tasks from their highly rated requesters at the top of their task feed. Field experiments verify that Boomerang causes both workers and requesters to provide feedback that is more closely aligned with their private opinions. Inspired by a game-theoretic notion of incentive-compatibility, Boomerang opens opportunities for interaction design to incentivize honest reporting over strategic dishonesty.
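As a toy illustration of the worker-side effect, the Python sketch below orders a task feed by the rating the worker previously gave each requester. The data layout, the 1 to 3 rating scale, and the default rating for unrated requesters are assumptions made for this example. They are not taken from the Boomerang system itself.

```python
def order_task_feed(tasks, ratings_by_requester, default_rating=2):
    """Sort tasks so that those from the worker's highest-rated requesters come first.

    Hypothetical sketch: `tasks` is a list of dicts with a "requester" key,
    and `ratings_by_requester` maps requester names to this worker's ratings.
    Unrated requesters get `default_rating`. Ties keep their original order
    because Python's sort is stable.
    """
    return sorted(
        tasks,
        key=lambda task: ratings_by_requester.get(task["requester"], default_rating),
        reverse=True,
    )


if __name__ == "__main__":
    feed = [
        {"id": 1, "requester": "alice"},
        {"id": 2, "requester": "bob"},
        {"id": 3, "requester": "carol"},
    ]
    my_ratings = {"alice": 1, "bob": 3}  # carol has not been rated yet
    for task in order_task_feed(feed, my_ratings):
        print(task["id"], task["requester"])
```

Because a worker's own ratings decide what they see first, giving an inflated rating to a poor requester pushes that requester's tasks up the worker's own feed.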

Smart Physio

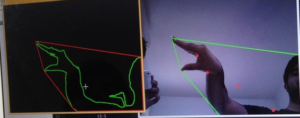

This project was carried out as part of MIT Media Lab’s Design Innovation Workshop. We wanted to make quality healthcare universal. We use the user’s laptop webcam to track the user as they perform their physiotherapy exercise. The algorithm checks in real time whether the exercise is being performed as instructed by the physician; if it finds an error, it notifies the user immediately.

You can check out the various steps involved in building this project in an interactive format here. The code can be accessed on GitHub.